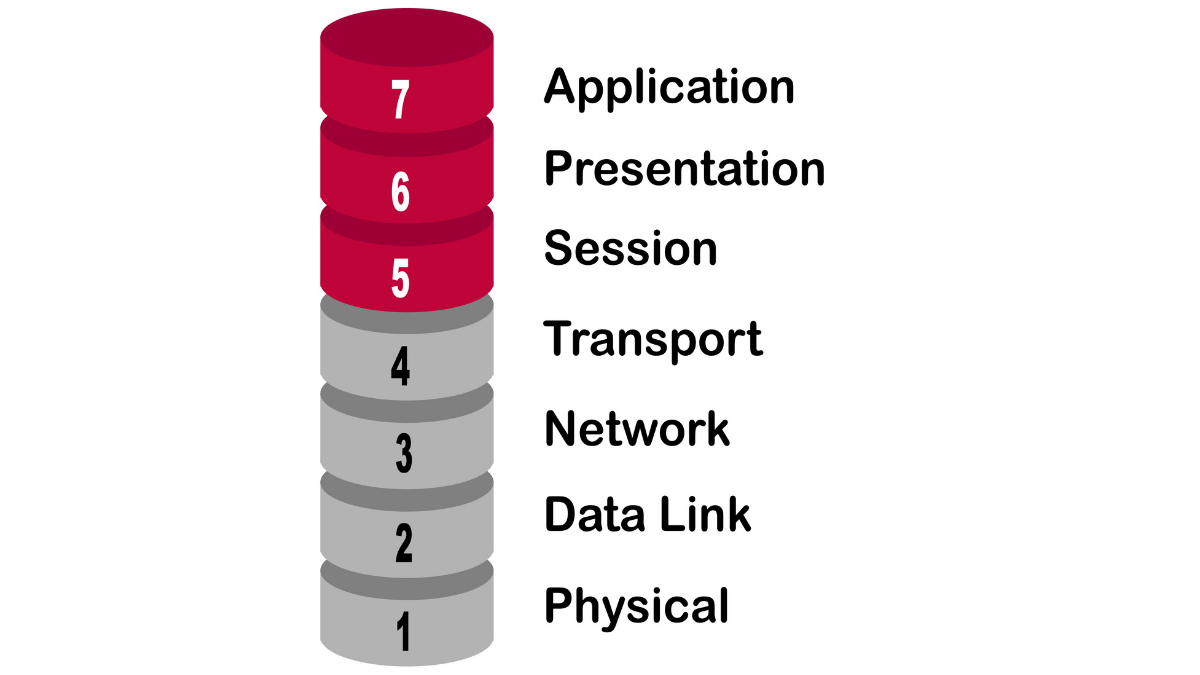

Developed by the International Organization for Standardization (ISO) in 1984, when network computing was in its infancy, the Open Systems Interconnection (OSI) model is a conceptual framework that describes how information passes through the hardware and software components of a network. It gives vendors a common reference for making devices and applications interoperable, so that data can move between them without vendor-specific barriers.

A universal model that applies to all networking devices, OSI divides network communication into seven layers of abstraction, each defined by a distinct set of network functions: physical, data link, network, transport, session, presentation, and application.
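The seven layers can be written down as a small enumeration, which is sometimes handy when tagging faults or tools by layer. This is only an illustrative sketch; the names `OSILayer`, `layers_bottom_up`, and `sender_order` are assumptions made for this example and not part of any standard library.

```python
from enum import IntEnum


class OSILayer(IntEnum):
    """The seven OSI layers, numbered from the bottom of the stack."""
    PHYSICAL = 1
    DATA_LINK = 2
    NETWORK = 3
    TRANSPORT = 4
    SESSION = 5
    PRESENTATION = 6
    APPLICATION = 7


def layers_bottom_up():
    """Return human-readable layer names from layer 1 to layer 7."""
    return [layer.name.replace("_", " ").title() for layer in OSILayer]


def sender_order():
    """Return the layers in the order a sender passes data down the stack."""
    return sorted(OSILayer, reverse=True)
```

A receiving host walks the same list in the opposite direction, from the physical layer up to the application layer.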

Layers of the OSI model

Physical layer

The physical layer handles the transmission of raw, unstructured bitstreams of data through a physical medium. This layer encompasses the physical and electrical components that must be deployed within a network, such as:

Hardware equipment: These are physical devices such as hubs, ports, Ethernet cables, plugs, and connectors, which act as intermediaries to external networks.

Types of cabling: This is the type of hardware used for connecting the devices within a network. Some of the most prevalent types of cabling hardware include coaxial cables, fiber-optic cables, and shielded or unshielded twisted pair cables. Cabling determines the quality of transmission and the data’s susceptibility to transmission-related impairments like attenuation loss, distortion, and noise.

Transmission medium: This is the mode of propagation adopted by the incoming or outgoing information. There are two types:

Guided medium: Data is directed to the receiving devices via confined pathways such as cables and wires.

Unguided medium: Also known as an unbounded medium, it transmits data through free space, for example as radio waves, and does not depend on cables or wires.

Network topologies: Topology is the arrangement of nodes, devices, and connections in a network. It determines the direction of traffic within the network and helps organizations understand the different elements of their networks and how they interlink. Some of the most commonly used topologies are:

Point-to-point topology: It involves the linear connection between two devices.

Ring topology: In ring topology, each device is connected in a circular fashion. When a sender transmits data within the network, each computer acts as a repeater by amplifying the data signal until it reaches its receiver.

Star topology: This is a centralized form of network in which each device is connected to a central hub through which devices communicate.

Mesh topology: Mesh topology eliminates the need for a central hub as every device has dedicated pathways of connection to the other devices present in the network. During transmission, the other devices act as repeaters until the data reaches its destination.

Bus topology: Devices in bus topology are individually connected via coaxial or other network cables to a single linear cable known as the backbone network.

Hybrid topology: A combination of any two or more topologies constitutes hybrid topology.
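To make the difference between these topologies concrete, the sketch below builds the set of links for a ring, a star, and a full mesh of `n` devices and lets you compare how many links each needs. The function names are hypothetical, devices are simply numbered from 0, and the ring builder assumes at least three devices.

```python
from itertools import combinations


def ring_links(n):
    """Each device links to the next; the last wraps around to the first (n >= 3)."""
    return {frozenset((i, (i + 1) % n)) for i in range(n)}


def star_links(n):
    """Device 0 plays the central hub; every other device links only to it."""
    return {frozenset((0, i)) for i in range(1, n)}


def full_mesh_links(n):
    """Every pair of devices gets its own dedicated link."""
    return {frozenset(pair) for pair in combinations(range(n), 2)}


if __name__ == "__main__":
    for name, build in [("ring", ring_links), ("star", star_links), ("mesh", full_mesh_links)]:
        print(name, len(build(5)), "links for 5 devices")
```

The link count grows linearly for ring and star layouts but quadratically, as n(n-1)/2, for a full mesh, which is the cost of removing the central hub.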

Signals: To enable transmission, low-frequency data signals are embedded into high-frequency carrier signals in order to minimize the loss of the signal’s strength and make the signal more compatible with the transmission medium. The data and carrier signals can either be in analog or digital form.

Analog signal: Analog signals are continuous, time-varying waveform signals characterized by electromagnetic waves.

Digital signal: These are signals with discrete values that are represented by square waves or clock signals. Digital signals contain binary data (0s and 1s) and are easily understandable by a device’s circuitry elements.

The physical layer supports modulation, which encodes data onto a carrier signal (for example, digital data onto an analog carrier), and demodulation, which recovers the original data from the modulated carrier at the receiver.
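As a rough illustration of placing data on a carrier and recovering it, the following sketch uses on-off keying, one of the simplest modulation schemes: a 1 bit switches a sine carrier on and a 0 bit switches it off. The constants `SAMPLES_PER_BIT` and `CARRIER_CYCLES_PER_BIT` and the 0.25 energy threshold are assumptions chosen for this example, and the channel is assumed to be noise-free.

```python
import math

SAMPLES_PER_BIT = 40          # assumed sampling resolution per bit period
CARRIER_CYCLES_PER_BIT = 4    # assumed carrier frequency relative to the bit rate


def modulate(bits):
    """On-off keying: emit the sine carrier for a 1 bit, silence for a 0 bit."""
    samples = []
    for bit in bits:
        for k in range(SAMPLES_PER_BIT):
            phase = 2 * math.pi * CARRIER_CYCLES_PER_BIT * k / SAMPLES_PER_BIT
            samples.append(bit * math.sin(phase))
    return samples


def demodulate(samples):
    """Recover bits by measuring the average signal energy in each bit period."""
    bits = []
    for start in range(0, len(samples), SAMPLES_PER_BIT):
        window = samples[start:start + SAMPLES_PER_BIT]
        energy = sum(s * s for s in window) / len(window)
        # A full-amplitude sine has mean energy 0.5, silence has 0.
        bits.append(1 if energy > 0.25 else 0)
    return bits
```

Real modems use more robust schemes and must cope with noise and timing drift; this only shows the round trip from bits to waveform and back.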

Data link layer

The second layer of the model, the data link layer, provides a protocol framework for the exchange of data between directly connected nodes in a LAN or WAN. The data link layer receives incoming data bits from the hardware devices belonging to the physical layer and assembles them into data link frames for further transmission. The layer also plays an important role in detecting and handling errors that occur during the transmission of these bits.
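The idea of wrapping data in a frame and checking it for transmission errors can be sketched as follows. The frame layout here (a 2-byte length, the payload, then a 4-byte CRC-32) is a made-up toy format for illustration, not the wire format of Ethernet or any other real protocol, and `build_frame` and `parse_frame` are hypothetical helper names.

```python
import struct
import zlib


def build_frame(payload: bytes) -> bytes:
    """Toy frame: 2-byte length, payload, 4-byte CRC-32 check value."""
    check = zlib.crc32(payload)
    return struct.pack("!H", len(payload)) + payload + struct.pack("!I", check)


def parse_frame(frame: bytes) -> bytes:
    """Return the payload, or raise ValueError if the check value does not match."""
    (length,) = struct.unpack("!H", frame[:2])
    payload = frame[2:2 + length]
    (check,) = struct.unpack("!I", frame[2 + length:2 + length + 4])
    if zlib.crc32(payload) != check:
        raise ValueError("frame check failed: payload was corrupted in transit")
    return payload
```

Flipping a single bit of the payload after `build_frame` causes `parse_frame` to reject the frame, which is the error-detection role described above.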

The data link layer has two sub-layers:

Logical link control: This is the upper sub-layer of the data link layer. It handles multiplexing of network-layer protocols, error control, and flow control of traffic, and it allows multipoint connections.

Medium access control: The medium access control (MAC) sub-layer defines how devices gain access to the shared physical medium and ensures that frames are delivered to the intended device on the local network. It contributes to error control by appending a frame check sequence to each frame, and it identifies source and destination devices using a unique identifier known as the MAC address, a 12-digit (48-bit) hexadecimal number assigned to a device’s network interface controller. The MAC address consists of two parts: the 24-bit organizationally unique identifier (OUI), which identifies the manufacturer, followed by a 24-bit value that the manufacturer assigns to the individual interface. Using MAC addresses, networks can filter devices, admitting only those that meet user-defined requirements.
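The two halves of a MAC address can be separated with a few lines of code. In this sketch, `split_mac` is a hypothetical helper and the address in the usage note is an arbitrary example value, not a real device.

```python
def split_mac(mac: str):
    """Split a MAC address into its 24-bit manufacturer prefix and 24-bit device part."""
    digits = mac.replace(":", "").replace("-", "").lower()
    if len(digits) != 12 or any(c not in "0123456789abcdef" for c in digits):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    # 12 hex digits * 4 bits each = 48 bits; the first 6 digits are the prefix.
    return digits[:6], digits[6:]
```

For example, `split_mac("00:1A:2B:3C:4D:5E")` returns the pair `("001a2b", "3c4d5e")`.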

Network layer

The network layer is responsible for directing information to the right destination, a task known as routing. This layer introduces logical addressing in the form of the Internet Protocol (IP) address, which, unlike the MAC address fixed to a network interface, can change depending on where the host sits in the network. In the network layer, information is transmitted in the form of packets, and each packet carries a header that contains the IP addresses of its source and destination hosts. There are two types of IP addresses: the 32-bit IPv4 address and the 128-bit IPv6 address. IPv6 was introduced because the IPv4 address space, roughly 4.3 billion addresses, could no longer accommodate the growth of new networks; both versions remain in use, with IPv6 adoption steadily increasing.
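The difference in size between the two address families is easy to inspect with Python's standard `ipaddress` module. The helper name `describe` is an assumption for this sketch, and the addresses used come from ranges reserved for documentation.

```python
import ipaddress


def describe(address: str):
    """Return (IP version, address length in bits) for a textual IP address."""
    ip = ipaddress.ip_address(address)
    return ip.version, ip.max_prefixlen


if __name__ == "__main__":
    for text in ("192.0.2.10", "2001:db8::1"):
        version, bits = describe(text)
        print(f"{text}: IPv{version}, {bits} bits, {2 ** bits} possible addresses")
```

A 32-bit address allows 2^32 (about 4.3 billion) distinct values, while a 128-bit address allows 2^128.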

Transport layer

The main objective of the transport layer is to ensure end-to-end delivery of data over a network by implementing flow and error control. The transport layer takes responsibility for sequencing individual units of data at the sender, reassembling them in order at the receiver, and overseeing their transmission, after which an acknowledgement is sent to the sender node to confirm that the data arrived without errors. The protocol data unit in the transport layer is known as a segment, and the process of dividing data into segments, known as segmentation, happens at the sender node.
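Segmentation and in-order reassembly can be sketched in a few lines. This is a simplified model: `segment` and `reassemble` are hypothetical helpers, each segment is just a (sequence number, bytes) pair, and acknowledgements and retransmission are left out.

```python
def segment(data: bytes, size: int):
    """Sender side: split data into numbered segments of at most `size` bytes."""
    return [(seq, data[offset:offset + size])
            for seq, offset in enumerate(range(0, len(data), size))]


def reassemble(segments):
    """Receiver side: restore the original order using the sequence numbers."""
    ordered = sorted(segments, key=lambda item: item[0])
    return b"".join(chunk for _, chunk in ordered)
```

Because every segment carries its sequence number, the receiver can rebuild the original data even when segments arrive out of order.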

The transport layer primarily features three protocols: TCP, UDP, and DCCP.

Transmission Control Protocol: TCP is a reliable, connection-oriented protocol that provides error control services to the transmitted data. It handles the segmentation and error-free transmission of data. Under TCP’s purview, an unsuccessful transmission of a data segment is subjected to retransmission.

User Datagram Protocol: In contrast to TCP, UDP is an unreliable, message-oriented protocol that provides a low-latency, connectionless, and unacknowledged service; lost or delayed packets are not retransmitted. UDP suits traffic that favors timeliness over guaranteed delivery, such as short request-response exchanges and real-time media.

Datagram Congestion Control Protocol: DCCP is a transport protocol that adds congestion control to unreliable datagram flows. Congestion control limits the number of packets a sender places on the network at a time, which helps maintain an acceptable network response time. DCCP supports Explicit Congestion Notification, which lets the network signal congestion to the endpoints so that the sender can reduce its rate before packets are dropped.
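The connectionless nature of UDP shows up directly in the socket API: a sender can transmit a datagram without first establishing a connection. The sketch below sends one datagram over the loopback interface using Python's standard `socket` module; `udp_roundtrip` is a hypothetical helper, and the 2-second timeout and 4096-byte buffer are arbitrary choices.

```python
import socket


def udp_roundtrip(message: bytes) -> bytes:
    """Send one datagram to a local receiver and return what the receiver got."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))      # port 0: let the OS pick a free port
        receiver.settimeout(2.0)             # avoid blocking forever if the datagram is lost
        # No handshake: the datagram is simply addressed and sent.
        sender.sendto(message, receiver.getsockname())
        data, _sender_address = receiver.recvfrom(4096)
        return data
```

A TCP version of the same exchange would use `SOCK_STREAM` and require the receiver to listen and accept a connection before any data could flow.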

The transport layer plays a crucial role in delivering latency-sensitive information, such as video conferencing and streaming of audiovisual content, which requires higher throughput for a seamless user experience.

Session layer

Supported by both half-duplex and full-duplex transmissions, the session layer sets up the active communication session between multiple devices. This layer creates, administers, and terminates the dialog, or session, between two nodes. Connections are established via authentication and authorization, with the former verifying the node’s credentials and the latter granting the appropriate level of access. A session terminated by an unexpected network failure can be recovered via the checkpointing services offered by the layer, along with adjournment and restart. Synchronization points can also be placed within the session, which allows multiple forms of data to travel in step with each other.
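Checkpoint-based recovery can be pictured with a toy model: the session records how far it has safely progressed, and after a failure it resumes from the last checkpoint rather than from the beginning. The `Session` class, its `fail_at` parameter (used only to simulate a failure), and the checkpoint interval are all assumptions made for this illustration.

```python
class Session:
    """Toy session that delivers items in order and checkpoints its progress."""

    def __init__(self, items):
        self.items = list(items)
        self.checkpoint = 0      # index of the first item not yet safely recorded
        self.delivered = []

    def run(self, fail_at=None, checkpoint_every=2):
        position = self.checkpoint
        # Discard anything delivered after the last checkpoint before resuming.
        del self.delivered[position:]
        while position < len(self.items):
            if position == fail_at:
                raise ConnectionError("simulated network failure")
            self.delivered.append(self.items[position])
            position += 1
            if position % checkpoint_every == 0:
                self.checkpoint = position
        self.checkpoint = position
        return self.delivered
```

If `Session("abcdefg").run(fail_at=5)` fails, the checkpoint sits at item 4, so a second call to `run()` on the same session redelivers only from that point onward.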

The session layer has applications in streaming and broadcast media, which require distinct mediums of data—audio and video—to be timecoded and displayed in a synchronous manner. Authentication systems, particularly the ones that utilize time-sensitive credentials like time-based one-time passwords, find operational support in the session layer. The session layer is widely used in webpages that require restoration and checkpointing of data.

Presentation layer

Also known as the translation layer, the presentation layer defines the format of data and its mode of encryption, making it easier for devices to consume data using appropriate applications. The presentation layer is also responsible for maintaining the appropriate syntax of the data it receives from or transmits to other layers within the model, which is why it’s also called the syntax layer. The presentation layer encourages interoperability amongst various encoding methods that are adopted by different nodes within a network. The basic protocols that constitute the presentation layer include:

Apple Filing Protocol: AFP is a proprietary network protocol from Apple; it offers file services for macOS and classic Mac OS platforms.

Lightweight Presentation Protocol: LPP delivers ISO presentation services over TCP- and UDP-based protocols.

NetWare Core Protocol: NCP is a Novell-designed network protocol that uses IP or Internetwork Packet Exchange. It offers users access to multiple network service functions including printing and sharing of files, a directory, clock synchronization, messaging, and remote command execution.

External Data Representation: XDR is a standard data serialization format that allows data to be transferred between two or more heterogeneous devices in a network. The process of converting locally represented data to XDR is called encoding, and the reconversion of XDR into local representation is called decoding.

Network Data Representation: NDR is a data encoding standard used to define or provide data types and representations. It is implemented in Distributed Computing Environment.

Secure Sockets Layer: SSL is an encryption-based security protocol, since succeeded by Transport Layer Security (TLS), that establishes a secure channel between nodes. It protects confidentiality by encrypting data, maintains data integrity throughout the transmission, and authenticates the communicating parties using certificates issued by certificate authorities. Once a connection is established, the data exchanged over it stays private.

The presentation layer also has applications in data minimization, which requires sensitive parts of the data to be masked so that they are not exposed to malicious actors. This layer facilitates faster transmission by compressing data (encoding it so that it occupies fewer bits), thereby decreasing the bandwidth and transmission time required for communication.
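The encoding and decoding step described for XDR above can be sketched with Python's `struct` module. XDR represents integers as 4-byte big-endian values and strings as a 4-byte length followed by the bytes, zero-padded to a multiple of four. The helper names below are hypothetical, and only two of XDR's data types are covered.

```python
import struct


def xdr_encode_int(value: int) -> bytes:
    """Encode a signed 32-bit integer as 4 big-endian bytes."""
    return struct.pack(">i", value)


def xdr_encode_string(text: str) -> bytes:
    """Encode a string as a 4-byte length, the bytes, then zero padding to a 4-byte boundary."""
    raw = text.encode("ascii")
    padding = (4 - len(raw) % 4) % 4
    return struct.pack(">I", len(raw)) + raw + b"\x00" * padding


def xdr_decode_string(data: bytes) -> str:
    """Decode a string produced by xdr_encode_string, ignoring the padding."""
    (length,) = struct.unpack(">I", data[:4])
    return data[4:4 + length].decode("ascii")
```

Because both sides agree on byte order and padding, machines with different native representations can exchange the same values.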
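The effect of compression on the amount of data to be transmitted can be seen with Python's standard `zlib` module. This is a minimal sketch; the function names are assumptions, and the savings depend heavily on how repetitive the data is.

```python
import zlib


def compress_for_transfer(data: bytes) -> bytes:
    """Compress data before sending; 9 is zlib's highest compression level."""
    return zlib.compress(data, 9)


def restore_after_transfer(blob: bytes) -> bytes:
    """Reverse the compression on the receiving side."""
    return zlib.decompress(blob)


if __name__ == "__main__":
    original = b"presentation layer " * 100
    packed = compress_for_transfer(original)
    print(len(original), "bytes before,", len(packed), "bytes after compression")
```

Highly repetitive input like the example shrinks dramatically, whereas already-compressed or random data may not shrink at all.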

Application layer

An abstraction layer that provides an interface for applications to interact (request resources and data) over a network, the application layer consists of protocols and services that facilitate this communication. The basic protocols that contribute to the functioning of the application layer include:

Telnet: Short for “teletype network,” Telnet is an application protocol that provides bidirectional and interactive text-oriented communication using a virtual terminal connection. Telnet is used to configure and manage the elements of network hardware, such as routers, ports, and switches, via textual commands. It also provides access to the command-line interface of a remote server, which enables users to operate and manage remote networks.

File Transfer Protocol: FTP uses a client-server architecture to transfer files between a server and client devices within a network.

Trivial File Transfer Protocol: TFTP runs over UDP to share files between a server and its client. It requires minimal memory and is generally used for transferring files between nodes within a local network.

Simple Mail Transfer Protocol: SMTP is the standard protocol for sending and relaying email between mail clients and servers.

Simple Network Management Protocol: SNMP is used to collect and manage information about managed devices connected to an IP network. It defines a set of standards that endpoints adhere to when exchanging management data with other services on the network. SNMP is commonly used to monitor device status and detect network faults.

Domain Name System: DNS is a hierarchical, decentralized naming system for devices and services in an IP-based network. It maps human-readable names, which users can easily remember, to the IP addresses that devices use to locate one another.

Dynamic Host Configuration Protocol: DHCP follows a client-server architecture to assign unique IP addresses and other configuration information (e.g., subnet mask or default gateway) to IP hosts before they begin communicating on the network.
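The address-assignment role of DHCP can be illustrated with a toy lease pool built on Python's `ipaddress` module. This models only the bookkeeping on the server side, not the actual DHCP message exchange; the `LeasePool` class, the client identifiers, and the documentation-range network used in the usage note are all assumptions.

```python
import ipaddress


class LeasePool:
    """Toy model of a DHCP server's address pool (no real DHCP messages involved)."""

    def __init__(self, network: str, gateway: str):
        self.network = ipaddress.ip_network(network)
        self.gateway = ipaddress.ip_address(gateway)
        # Usable host addresses, skipping the one reserved for the gateway.
        self._free = (host for host in self.network.hosts() if host != self.gateway)
        self.leases = {}

    def request(self, client_id: str) -> dict:
        """Return the configuration for a client, assigning a new address if needed."""
        if client_id not in self.leases:
            self.leases[client_id] = next(self._free)  # raises StopIteration when exhausted
        return {
            "ip": str(self.leases[client_id]),
            "subnet_mask": str(self.network.netmask),
            "gateway": str(self.gateway),
        }
```

With `LeasePool("192.0.2.0/29", "192.0.2.1")`, the first two clients receive 192.0.2.2 and 192.0.2.3, and a client that asks again gets the same address back.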

The application layer supports API-based operations to establish interoperability and compatibility between different applications used by hosts. This layer also supplements the functioning of email services in a network.

Pros and cons of the OSI model

The OSI model presents a generic standard that can be referenced by device manufacturers and network operators. Due to its uniquely defined layers, OSI aids in faster troubleshooting; security engineers can streamline mitigation by tracing the defective entity to its respective layer instead of having to check each layer for defects. OSI is an open-protocol suite that can be implemented in connection-oriented as well as connectionless networks.

Despite its pros, OSI has a major disadvantage: its vastness. One of OSI’s counterparts, the TCP/IP model, offers a compact, flexible architecture with four layers (network access, internet, transport, and application) and is scalable enough to fulfill the network demands of the present. Additionally, the complexity of the OSI model and its duplication of services (both the transport and data link layers have error control features) make it difficult to implement in practice, although it remains theoretically significant.

The future of the OSI model

With the IP suite still considered the preferred and most viable standard, and with the emergence of software-defined networks, the OSI model has been pushed close to obsolescence as an implementation. Despite its redundancies, OSI’s detailed classifications can be used as a basis for delegating tasks to an organization’s workforce. For instance, an application developer operates at the application and presentation layers, whereas a network engineer, responsible for overseeing IP-related actions of endpoints, operates at the network and transport layers of the organizational network. OSI layers can also be used as a yardstick to measure the scope and interoperability of an application or solution.